HDL – Sample HDL files load Content Items

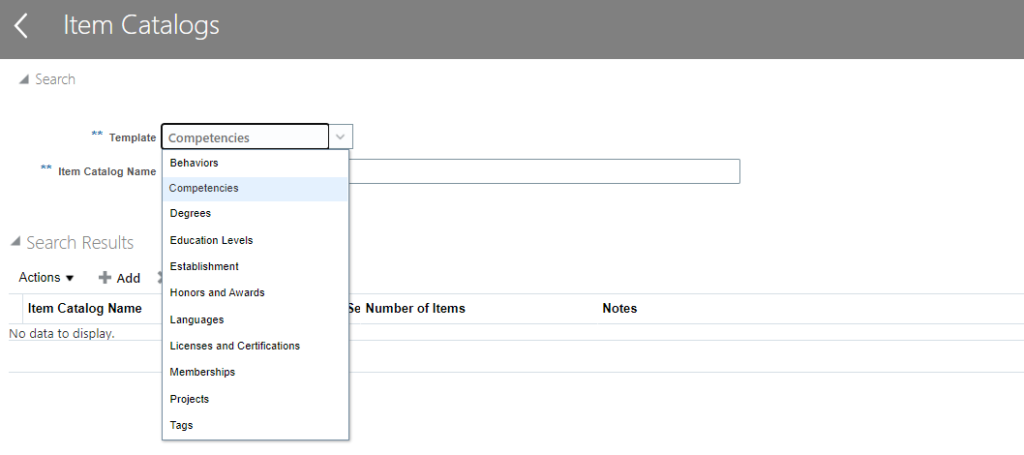

To bulk upload items catalogs in HCM profiles, you can use ContentItem.dat. Each of the template have certain mandatory attributes like Context Name, Value Set Name or Value Set Id:

S0, before you start preparing the file, you need to have below information handy:

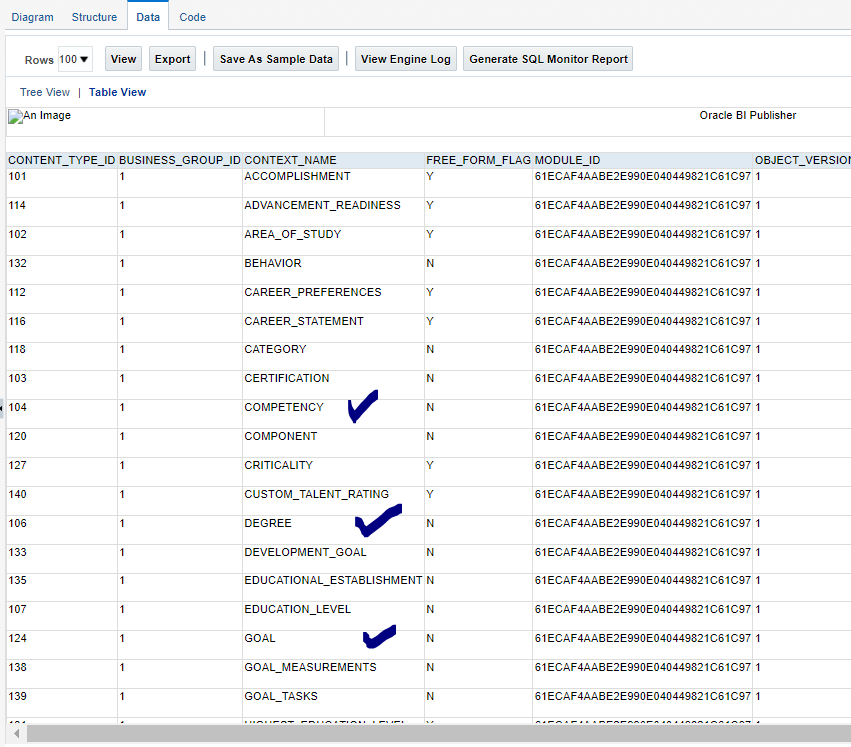

- Context Name – This is a mandatory attribute. If you don’t pass the value in your HDL file, you will get below error:

The values 3000122xxxxx aren’t valid for ContentItemValueSetId.

You can get the context name from HRT_CONTENT_TYPES_B table:

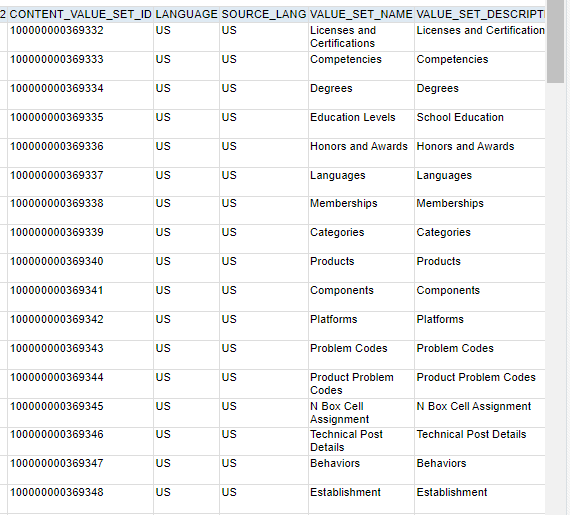

2. Content Item Value Set Name/Id: This is again a mandatory attribute. You can get the Content Value Set Name/ Id from HRT_CONTENT_TP_VALUESETS_TL table.

Once you have the details, you can prepare ContentItem.dat.

Below are the sample files for different item catalog templates:

For Establishments:

METADATA|ContentItem|Name|ContextName|ContentItemValueSetName|ContentItemValueSetId|ContentItemCode|DateFrom|DateTo|RatingModelCode|SourceSystemId|SourceSystemOwner

MERGE|ContentItem|Indian Institute of Technology, Bombay|EDUCATIONAL_ESTABLISHMENT|Establishment||IIT_B|1951/01/01|||IIT_B|HRC_SQLLOADER

MERGE|ContentItem|Indian Institute of Management, Ahemdabad|EDUCATIONAL_ESTABLISHMENT|Establishment||IIM_A|1951/01/01|||IIT_B|HRC_SQLLOADER

For Licenses and Certifications:

METADATA|ContentItem|Name|ContextName|ContentItemValueSetName|ContentItemValueSetId|ContentItemCode|DateFrom|DateTo|RatingModelCode|SourceSystemId|SourceSystemOwner

MERGE|ContentItem|Oracle Global Human Resources 2023|CERTIFICATION|Licenses and Certifications||O_GHR_2023|1951/01/01|||O_GHR_2023|HRC_SQLLOADER MERGE|ContentItem|Oracle Benefits 2023|CERTIFICATION|Licenses and Certifications||O_BEN_2023|1951/01/01|||O_BEN_2023|HRC_SQLLOADERFor Degrees:

METADATA|ContentItem|Name|ContextName|ContentItemValueSetName|ContentItemValueSetId|ContentItemCode|DateFrom|DateTo|RatingModelCode|SourceSystemId|SourceSystemOwner

MERGE|ContentItem|PhD|DEGREE|Degrees||XX_PhD|1951/01/01|||CI_XX_PhD|HRC_SQLLOADER

MERGE|ContentItem|Higher National Certificate|DEGREE|Degrees||XX_Higher National Certificate|1951/01/01|||CI_XX_Higher National Certificate|HRC_SQLLOADER For Competencies:

METADATA|ContentItem|ContextName|ContentItemValueSetName|Name|ContentItemId|ContentItemCode|DateFrom|DateTo|ItemDescription|RatingModelId|RatingModelCode|CountryGeographyCode|CountryCountryCode|SourceSystemId|SourceSystemOwner

MERGE|ContentItem|COMPETENCY|Competencies|Accounting Standards and Principles||XX_ASAP|1951/01/01||To check knowledge on Accounting Standards and Principles.|5|PROFICIENCY|||XX_ASAP|HRC_SQLLOADER

MERGE|ContentItem|COMPETENCY|Competencies|Assessing Talent||XX_AT|1951/01/01||To check knowledge on Assessing Talent.|5|PROFICIENCY|||XX_AT|HRC_SQLLOADER

MERGE|ContentItem|COMPETENCY|Competencies|Assurance and Reporting||XX_AAR|1951/01/01||To check knowledge on Assurance and Reporting.|5|PROFICIENCY|||XX_AAR|HRC_SQLLOADERFor Languages:

METADATA|ContentItem|Name|ContextName|ContentItemValueSetName|ContentItemValueSetId|ContentItemCode|DateFrom|DateTo|RatingModelCode|SourceSystemId|SourceSystemOwner

MERGE|ContentItem|Hindi|LANGUAGE|Languages||XX_Hindi|1951/01/01|||CI_Hindi|HRC_SQLLOADER

MERGE|ContentItem|Punjabi|LANGUAGE|Languages||XX_Punjabi|1951/01/01|||CI_Punjabi|HRC_SQLLOADER

Please note that Rating Mode Id is mandatory for loading competencies. You can find the rating model id and rating model code from hrt_rating_models_b table.

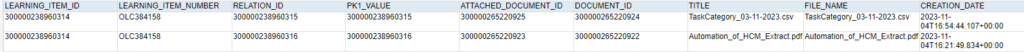

Once the data load is successful, you can run below queries to extract loaded data: