TalentProfile.dat can be used to create worker talent profiles and to load content items against those profiles. Most content items are straightforward to load, but competencies are a little tricky: additional fields such as QualifierId1 and QualifierId2 must be supplied for the loaded data to appear on the UI.

Sample HDL file to load competencies:

METADATA|ProfileItem|ProfileItemId|ProfileId|ProfileCode|ContentItemId|ContentTypeId|ContentType|CountryId|DateFrom|DateTo|RatingModelId1|RatingModelCode1|RatingLevelId1|RatingLevelCode1|RatingModelId2|RatingModelCode2|RatingLevelId2|RatingLevelCode2|SectionId|SourceSystemOwner|SourceSystemId|QualifierId1|QualifierId2|ItemDate6|ContentItem

MERGE|ProfileItem|||PERSON_112211||104|COMPETENCY||2022/02/07||300000236131123||||300000236131123|PERFORMANCE||3|300000236137110|HRC_SQLLOADER|PERSON_112211|300000236137121|100000086708123|2018/08/18|Leadership Skills
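Hand-counting pipes in a 24-attribute line is error-prone, so the lines can be generated instead. The following is a minimal Python sketch, not part of HDL itself: the helper name `build_profile_item_lines` and the dictionary-based row format are assumptions made for illustration. The attribute order and the sample values are copied from the METADATA and MERGE lines above.

```python
# Attribute order copied from the METADATA line in the sample file.
PROFILE_ITEM_ATTRIBUTES = [
    "ProfileItemId", "ProfileId", "ProfileCode", "ContentItemId",
    "ContentTypeId", "ContentType", "CountryId", "DateFrom", "DateTo",
    "RatingModelId1", "RatingModelCode1", "RatingLevelId1", "RatingLevelCode1",
    "RatingModelId2", "RatingModelCode2", "RatingLevelId2", "RatingLevelCode2",
    "SectionId", "SourceSystemOwner", "SourceSystemId",
    "QualifierId1", "QualifierId2", "ItemDate6", "ContentItem",
]


def build_profile_item_lines(rows):
    """Return the METADATA line followed by one MERGE line per row.

    Hypothetical helper: each row is a dict keyed by attribute name.
    Attributes missing from a row are written as empty fields.
    """
    lines = ["METADATA|ProfileItem|" + "|".join(PROFILE_ITEM_ATTRIBUTES)]
    for row in rows:
        unknown = set(row) - set(PROFILE_ITEM_ATTRIBUTES)
        if unknown:
            raise ValueError(f"Unknown attributes: {sorted(unknown)}")
        values = [str(row.get(name, "")) for name in PROFILE_ITEM_ATTRIBUTES]
        lines.append("MERGE|ProfileItem|" + "|".join(values))
    return lines


# Values taken from the sample MERGE line above.
sample_row = {
    "ProfileCode": "PERSON_112211",
    "ContentTypeId": 104,
    "ContentType": "COMPETENCY",
    "DateFrom": "2022/02/07",
    "RatingModelId1": 300000236131123,
    "RatingModelId2": 300000236131123,
    "RatingModelCode2": "PERFORMANCE",
    "RatingLevelCode2": 3,
    "SectionId": 300000236137110,
    "SourceSystemOwner": "HRC_SQLLOADER",
    "SourceSystemId": "PERSON_112211",
    "QualifierId1": 300000236137121,
    "QualifierId2": 100000086708123,
    "ItemDate6": "2018/08/18",
    "ContentItem": "Leadership Skills",
}

dat_lines = build_profile_item_lines([sample_row])
print("\n".join(dat_lines))
```

Because every MERGE line is built from the same attribute list as the METADATA line, the two always have the same number of fields. The sketch does not escape pipe characters inside values, so it assumes none of the values contain one.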

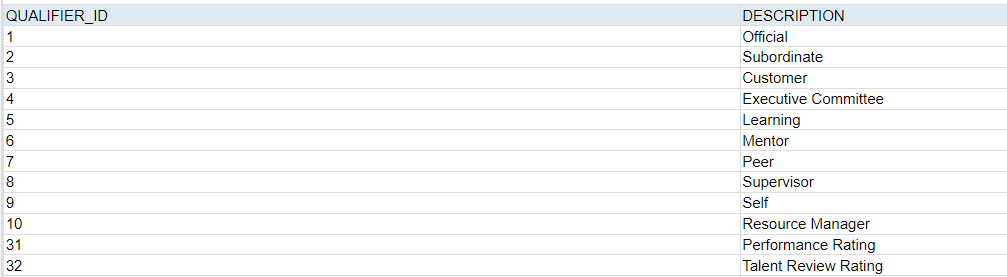

Use the following query to extract the qualifier data for a section:

select QUALIFIER_ID, DESCRIPTION
from FUSION.HRT_QUALIFIERS_VL
where QUALIFIER_SET_ID in (
select QUALIFIER_SET_ID from FUSION.HRT_QUALIFIER_SETS_VL where SECTION_ID = 300000236137110)

Sample data from above query:

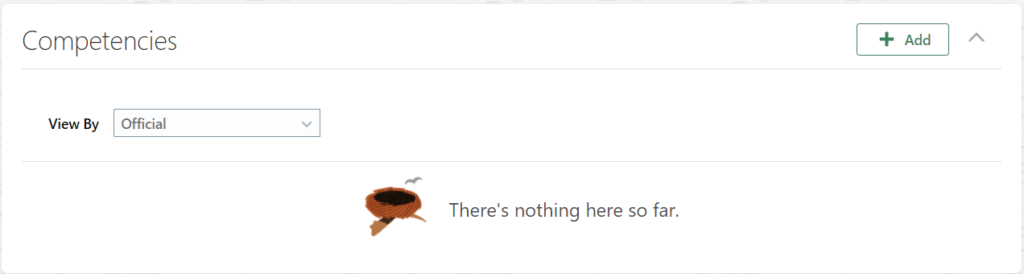

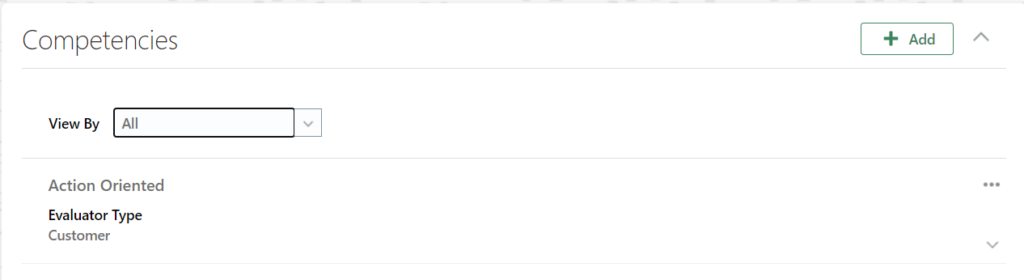

By default, View By is set to “Official” on the UI:

Navigation: Person Management -> Talent Profile

So, if you pass a QualifierId1 other than the ID for “Official”, the loaded data will not be visible in the default view. To see it, set “View By” to All.

Below is a high-level description of the main attributes of the ProfileItem business object:

ProfileId – Profile ID of the person. If you don’t have it, leave it blank and supply ProfileCode instead.

ProfileCode – Use this when you don’t know the ProfileId.

DateFrom – From date of the profile item.

RatingLevelCode2 – Pass 1, 2, 3, 4 or 5 according to the rating level; the available levels are listed under the rating model.

SourceSystemId – Pass the same value as ProfileId or ProfileCode.

ItemDate6 – Review date.

ContentItem – Competency name.

QualifierId1 – QUALIFIER_ID of the appropriate evaluator type, taken from the query above.

QualifierId2 – Person ID corresponding to the evaluator type. For example, if QualifierId1 is the QUALIFIER_ID for Self, pass the worker’s PERSON_ID in QualifierId2.
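Since a competency row without qualifiers loads but does not show up on the UI, it can help to check the file before submitting it. The sketch below is one possible pre-load check, written under assumptions: the function name `find_missing_qualifiers` is hypothetical, the file is split on plain `|` characters with no handling of escaped delimiters, and the sample rows use made-up profile codes and IDs.

```python
def find_missing_qualifiers(dat_text):
    """Return (line_number, missing_attributes) for COMPETENCY rows
    whose QualifierId1 or QualifierId2 is blank or absent.

    Hypothetical helper: reads the attribute order from the METADATA
    line for ProfileItem and applies it to each MERGE line that follows.
    """
    header = None
    problems = []
    for line_no, line in enumerate(dat_text.splitlines(), start=1):
        parts = line.strip().split("|")
        if len(parts) < 2 or parts[1] != "ProfileItem":
            continue  # skip blank lines and other business objects
        if parts[0] == "METADATA":
            header = parts[2:]
        elif parts[0] == "MERGE" and header is not None:
            row = dict(zip(header, parts[2:]))
            if row.get("ContentType") != "COMPETENCY":
                continue
            missing = [name for name in ("QualifierId1", "QualifierId2")
                       if not row.get(name)]
            if missing:
                problems.append((line_no, missing))
    return problems


# Made-up rows for illustration; the second MERGE line has no QualifierId2.
sample_dat = (
    "METADATA|ProfileItem|ProfileCode|ContentType|QualifierId1|QualifierId2|ContentItem\n"
    "MERGE|ProfileItem|PERSON_1|COMPETENCY|111|222|Leadership Skills\n"
    "MERGE|ProfileItem|PERSON_2|COMPETENCY|111||Teamwork\n"
)

problems = find_missing_qualifiers(sample_dat)
for line_no, missing in problems:
    print(f"Line {line_no}: missing {', '.join(missing)}")
```

The check only confirms that both qualifier fields are populated. It does not verify that QualifierId1 belongs to the qualifier set of the section or that QualifierId2 is a valid person ID; those still need to be confirmed against the query results.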