Post P2T refresh, it is mandatory to mask the actual email Ids of the users in cloned test environment. This is required to avoid any unwanted emails getting triggered to the end users.

The approach is already discussed in below post: https://fusionhcmconsulting.com/2020/12/hdl-query-to-delete-phones-data/

Use the below SQL to extract the data in HDL format:

select 'MERGE' "METADATA",

'PersonEmail' "PersonEmail",

pea.email_address_id "EmailAddressId",

pea.person_id "PersonId",

to_char(pea.date_from,'RRRR/MM/DD') "DateFrom",

to_char(pea.date_to,'RRRR/MM/DD') "DateTo",

pea.email_type "EmailType",

-->pea.email_address "1EmailAddress",

replace(replace(pea.email_address,'@','@invalid'),'.co','.xco') "EmailAddress", --> Change the format as per your requirement

(select email_hrc.source_system_id

from hrc_integration_key_map email_hrc

WHERE pea.email_Address_id = email_hrc.surrogate_id) "SourceSystemId",

(select email_hrc.source_system_owner

from hrc_integration_key_map email_hrc

WHERE pea.email_Address_id = email_hrc.surrogate_id) "SourceSystemOwner"

from per_email_addresses pea

where 1=1

and upper(email_address) like '%XXCOMPANY%' --> Change the format as per your requirement

Query with Person Number:

select 'MERGE' "METADATA",

'PersonEmail' "PersonEmail",

pea.email_address_id "EmailAddressId",

pea.person_id "PersonId",

papf.person_number "PersonNumber",

to_char(pea.date_from,'RRRR/MM/DD') "DateFrom",

to_char(pea.date_to,'RRRR/MM/DD') "DateTo",

pea.email_type "EmailType",

-->pea.email_address "1EmailAddress",

replace(replace(pea.email_address,'@','@invalid'),'.co','.xco') "EmailAddress", --> Change the format as per your requirement

(select email_hrc.source_system_id

from hrc_integration_key_map email_hrc

WHERE pea.email_Address_id = email_hrc.surrogate_id) "SourceSystemId",

(select email_hrc.source_system_owner

from hrc_integration_key_map email_hrc

WHERE pea.email_Address_id = email_hrc.surrogate_id) "SourceSystemOwner"

from per_email_addresses pea, per_all_people_f papf

where 1=1

and pea.person_id = papf.person_id

and trunc(sysdate) between papf.effective_start_date and papf.effective_end_date

and upper(email_address) like '%XXCOMPANY%' --> Change the format as per your requirement

Once the data is generated, create the HDL file and load the Worker.dat.

Post data load steps:

- Run “Send Personal Data for Multiple Users to LDAP” process. This process will sync up the latest email address from worker email to user.

- Login to Security Console and verify for couple of users from UI or you can verify from backend.

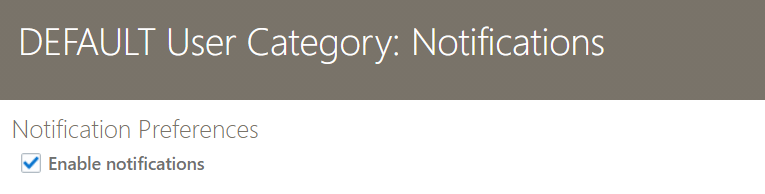

- Disable the notifications (optional) – Navigate to Security Console -> User Categories -> Notification Preferences -> Uncheck the Enable Notifications:

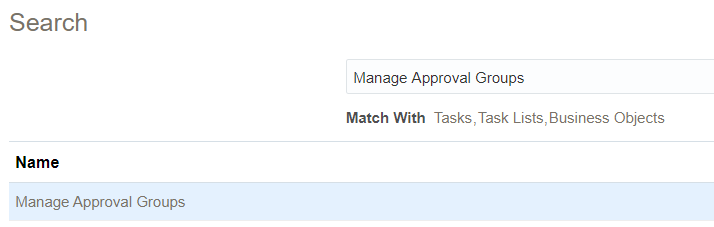

4. Or you can redirect all the notification to single email address. Search for ‘Manage Approval Groups’ task in ‘Setup and Maintenance”:

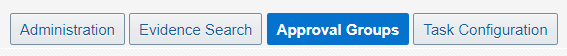

- Clicking on above task will take you to the BPM Worklist page.

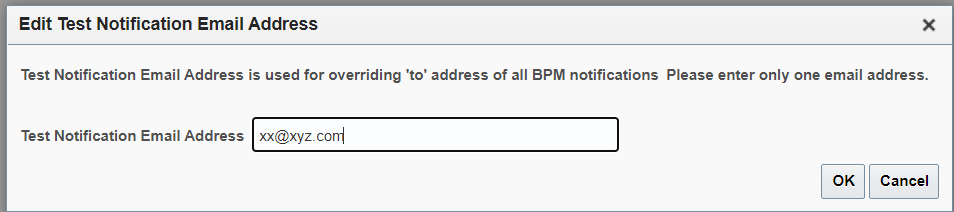

- Click on “Administration” button and search for Test Notification Email Address and provide the value where you want to redirect all your notifications:

If you want to completely purge the email data then use below post:

https://fusionhcmconsulting.com/2022/10/hdl-delete-person-email-addresses/